Intelligent Vehicles that Can See

Autonomous Driving based on AI

Deep Learning of Road Profiles from Driving Videos

Driving video is available from

in-car camera for road detection and collision avoidance. However, consecutive

video frames in a large volume have redundant scene coverage during vehicle

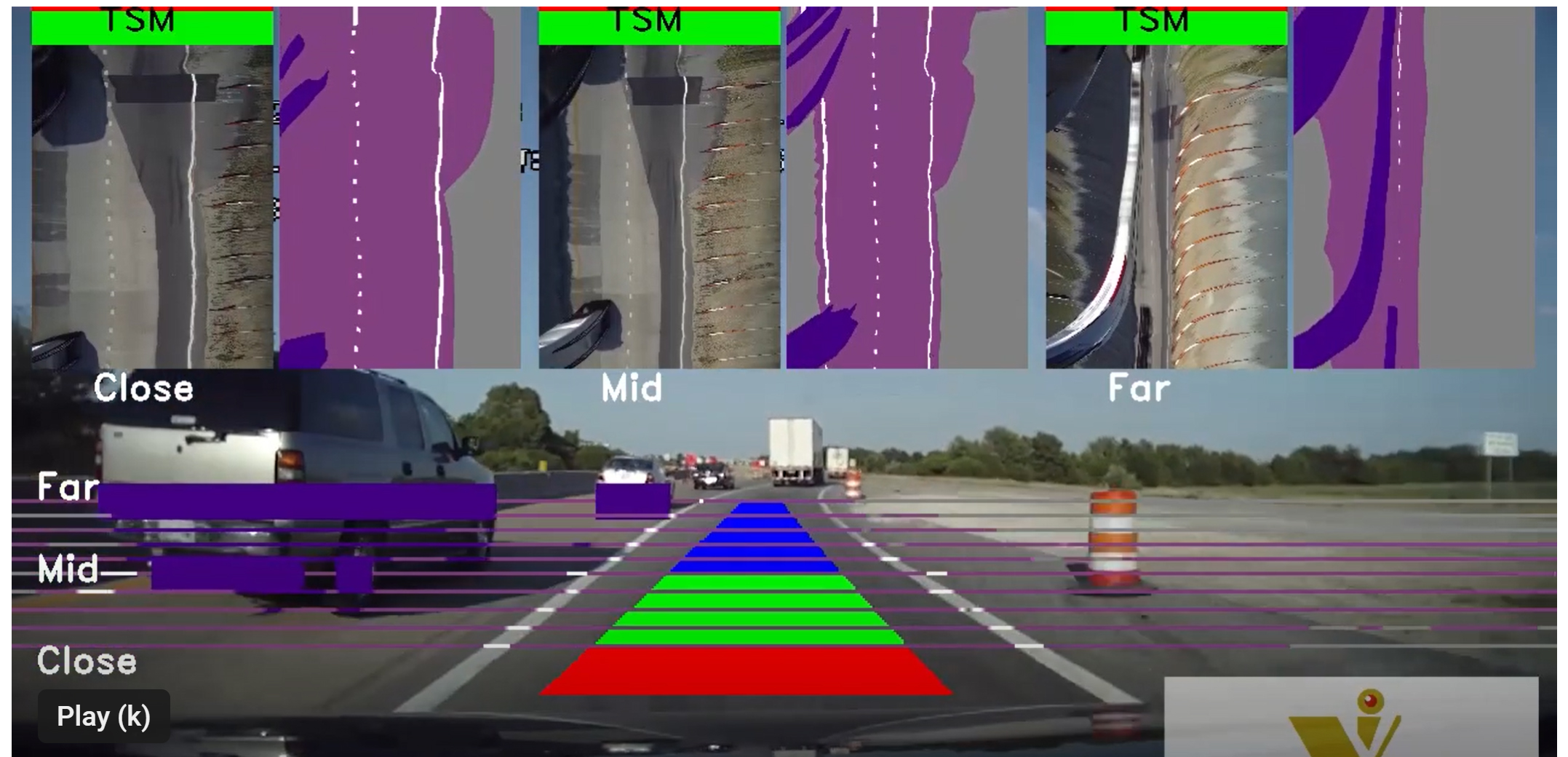

motion, which hampers real-time perception in autonomous driving. This work

utilizes compact road profiles (RP) and motion profiles (MP) to identify path

regions and dynamic objects, which drastically reduces video data to a lower

dimension and increases sensing rate. To avoid collision in a close range and

navigate a vehicle in middle and far ranges, several RP/MPs are scanned

continuously from different depths for vehicle path planning. We train deep

network to implement semantic segmentation of RP in the spatial-temporal

domain, in which we further propose a temporally shifting memory for online testing.

It sequentially segments every incoming line without latency by referring to a

temporal window. In streaming-mode, our method generates real-time output of

road, roadsides, vehicles, pedestrians, etc. at discrete depths for path

planning and speed control. We have experimented our method on naturalistic

driving videos under various weather and illumination conditions. It reached

the highest efficiency with the least amount of data.
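A road profile can be pictured as one fixed image row sampled from every frame and stacked over time, so each frame contributes a single line instead of a full image. The sketch below is illustrative only: it assumes frames arrive as 2-D lists of pixel values and that the rows to scan (one per depth) are already chosen. `extract_profiles`, `StreamingSegmenter`, and `threshold_segmenter` are hypothetical names, and the threshold function is a toy stand-in for the deep network described above. The bounded window mimics the idea of segmenting each incoming line with reference to a short temporal window.

```python
from collections import deque
from typing import Callable, Dict, List, Sequence

Frame = List[List[int]]  # hypothetical: a grayscale frame as rows of pixel values


def extract_profiles(frames: Sequence[Frame], rows: Sequence[int]) -> Dict[int, Frame]:
    """Stack row r of every frame into a profile image (time runs down the rows)."""
    profiles: Dict[int, Frame] = {r: [] for r in rows}
    for frame in frames:
        for r in rows:
            profiles[r].append(list(frame[r]))
    return profiles


class StreamingSegmenter:
    """Label each incoming profile line using a fixed-length window of recent lines.

    segment_window is a hypothetical stand-in for a trained model: it receives the
    whole window and returns labels for the newest line only, so no future lines
    are needed before output is produced.
    """

    def __init__(self, window: int, segment_window: Callable[[List[List[int]]], List[str]]):
        self.buffer: deque = deque(maxlen=window)  # oldest line drops out automatically
        self.segment_window = segment_window

    def push(self, line: List[int]) -> List[str]:
        self.buffer.append(line)
        return self.segment_window(list(self.buffer))


def threshold_segmenter(window: List[List[int]]) -> List[str]:
    """Toy stand-in: average each column over the window, then threshold."""
    n = len(window)
    means = [sum(col) / n for col in zip(*window)]
    return ["road" if m < 100 else "other" for m in means]
```

The bounded `deque` keeps memory constant however long the drive lasts, which is what allows line-by-line output in a streaming setting.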


Publications:

G. Cheng, J. Y. Zheng, Sequential Semantic Segmentation of Road Profiles for Path and Speed Planning, IEEE Transactions on Intelligent Transportation Systems, 1-14, 2022. (video)

G. Cheng, J. Y. Zheng, M. Kilicarslan, Semantic segmentation of road profiles for efficient sensing in autonomous driving, IEEE Intelligent Vehicles Symposium (IV), 564-569, 2019.

Dataset:

Driving Video Profile (DVP) dataset: www.cs.iupui.edu/~jzheng/drivingVideoProfile1.htm

Data Mining of Naturalistic Driving Video (partly supported by the Dept. of Transportation and NIST.DOC)

Many vehicles are now equipped with vehicle-borne camera systems for monitoring driver behavior, accident investigation, road environment assessment, and vehicle safety design. A huge amount of video data is recorded daily, and analyzing and interpreting these data efficiently has become a non-trivial task. As an index of video for quick browsing, this work maps the video into a temporal image of reduced dimension that retains as much of the intrinsic information observed on the road as possible. The perspective-projection video is converted to a top-view temporal profile that carries precise time, motion, and event information while the vehicle is driving. We then attempt to interpret dynamic events and the environment around the vehicle from this continuous and compact temporal profile. The reduced dimension of the temporal profile allows the video to be browsed intuitively and efficiently.
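Converting perspective video into a top-view profile relies on mapping image positions to ground positions. A minimal sketch, assuming a pinhole camera at height H above a flat road with its optical axis parallel to the ground (no pitch or roll), focal length f in pixels, and principal point (cx, cy): a pixel (u, v) below the horizon row cy lies on the ground at forward depth Z = f*H/(v - cy) and lateral offset X = (u - cx)*H/(v - cy). These assumptions and the function names are illustrative, not the calibration used in the cited work. The inverse, v = cy + f*H/Z, is one way to pick which image rows to scan for profiles at chosen depths.

```python
from typing import Optional, Tuple


def image_to_ground(u: float, v: float, f: float, cx: float, cy: float,
                    cam_height: float) -> Optional[Tuple[float, float]]:
    """Map pixel (u, v) to ground-plane (X lateral, Z forward) in metres.

    Assumes a flat road and a camera whose optical axis is parallel to it.
    Returns None for pixels on or above the horizon row cy.
    """
    dv = v - cy
    if dv <= 0:
        return None  # at or above the horizon: the viewing ray never meets the road ahead
    z = f * cam_height / dv
    x = (u - cx) * cam_height / dv
    return x, z


def row_for_depth(depth: float, f: float, cy: float, cam_height: float) -> float:
    """Inverse mapping: the image row that sees the road at a given forward depth."""
    return cy + f * cam_height / depth
```

For example, with f = 1000 px, cy = 360, and H = 1.5 m, the row 100 pixels below the horizon images the road 15 m ahead.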

Publications:

Z. Wang, J. Y. Zheng, Z. Gao, Detecting Vehicle Interactions in Driving Videos via Motion Profiles, IEEE Intelligent Transportation Systems Conference, 1-6, 2020.

K. Kolcheck, Z. Wang, H. Xu, J. Y. Zheng, Visual Counting of Traffic Flow from a Car via Vehicle Detection and Motion Analysis, Asian Conference on Pattern Recognition, 529-543, 2019. (Best Paper Award on Road and Safety)

G. Cheng, J. Y. Zheng, H. Murase, Sparse coding of weather and illuminations for ADAS and autonomous driving, IEEE Intelligent Vehicles Symposium, 1-6, 2018.

G. Cheng, Z. Wang, J. Y. Zheng, Modeling weather and illuminations in driving views based on big-video mining, IEEE Transactions on Intelligent Vehicles, 3(4), 522-533, 2018.

M. Kilicarslan, J. Y. Zheng, Visualizing Driving Video in Temporal Profile, IEEE Intelligent Vehicles Symposium, 1-7, 2014.

Dataset:

J. Y. Zheng, IUPUI Driving Videos and Images in All Weather and Illumination Conditions, CDVL Tech Memo, https://arxiv.org/abs/2104.08657

Pedestrian Detection from Motion in Driving Video

Pedestrian detection for driving safety has been studied intensively based on appearance. Only a few works have explored between-frame optical flow as one of the features for human classification. In this work, however, we take a new point of view and observe motion over a longer period to find non-smooth movement. We explore pedestrian detection based purely on motion, which is common and intrinsic to all pedestrians regardless of their shape, color, background, etc. We found that human motion has unique characteristics that differ from the motion of rigid objects induced by the vehicle's own movement.
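One way to make "non-smooth movement over a longer period" concrete is to track a point's horizontal image position across many frames and check how often its acceleration changes direction. The sketch below is an illustrative assumption, not the published detector: static scenery seen from a moving vehicle tends to trace smooth curves whose discrete acceleration keeps one sign, while points on swinging legs move in a jerky, back-and-forth pattern. The noise tolerance `eps`, the `max_rate` threshold, and the function names are hypothetical.

```python
from typing import Sequence


def oscillation_rate(xs: Sequence[float], eps: float = 1e-9) -> float:
    """Fraction of consecutive accelerations that flip sign along a trajectory.

    acc[i] = xs[i+1] - 2*xs[i] + xs[i-1] is a discrete acceleration. Smooth
    trajectories (constant velocity, or steadily accelerating) keep one sign;
    jerky, back-and-forth motion flips sign. |acc| <= eps counts as zero (noise).
    """
    if len(xs) < 4:
        raise ValueError("need at least four samples")
    acc = [xs[i + 1] - 2 * xs[i] + xs[i - 1] for i in range(1, len(xs) - 1)]
    signs = [1 if a > eps else -1 if a < -eps else 0 for a in acc]
    flips = sum(1 for s, t in zip(signs, signs[1:]) if s * t < 0)
    return flips / (len(signs) - 1)


def looks_non_smooth(xs: Sequence[float], max_rate: float = 0.5) -> bool:
    """Hypothetical rule: flag a trajectory whose acceleration flips sign often."""
    return oscillation_rate(xs) > max_rate
```

A steadily accelerating trace such as `[0, 1, 4, 9, 16]` scores 0.0, while a zigzag such as `[0, 3, 1, 4, 2, 5]` scores 1.0.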

Publications:

M. Kilicarslan, J. Y. Zheng, DeepStep: Direct Detection of Walking Pedestrian From Motion by a Vehicle Camera, IEEE Transactions on Intelligent Vehicles, 2022, doi: 10.1109/TIV.2022.3186962. (video)

G. Cheng, J. Y. Zheng, Semantic Segmentation for Pedestrian Detection from Motion in Temporal Domain, International Conference on Pattern Recognition, 1-7, 2020. (video)

M. D. Sulistiyo, Y. Kawanishi, D. Deguchi, I. Ide, T. Hirayama, J. Y. Zheng, H. Murase, Attribute-Aware Loss Function for Accurate Semantic Segmentation Considering the Pedestrian Orientations, IEICE Transactions on Fundamentals of Electronics, Communications and Computer Sciences, E103.A(1), 231-242, 2020.

M. D. Sulistiyo, Y. Kawanishi, D. Deguchi, T. Hirayama, I. Ide, J. Y. Zheng, H. Murase, Attribute-aware semantic segmentation of road scenes for understanding pedestrian orientations, IEEE Intelligent Transportation Systems Conference, 1-6, 2018.

M. Kilicarslan, J. Y. Zheng, K. Raptis, Pedestrian detection from motion, 23rd International Conference on Pattern Recognition, 1857-1863, 2016. (video)

M. Kilicarslan, J. Y. Zheng, A. Algarni, Pedestrian detection from non-smooth motion, IEEE Intelligent Vehicles Symposium (IV), 487-492, 2015.

M. Kilicarslan, J. Y. Zheng, Detecting walking pedestrians from leg motion in driving video, IEEE 17th International Conference on Intelligent Transportation Systems (ITSC), 2924-2929, 2014.

Bicyclist Detection in Driving Video (supported by Toyota)

Monocular-camera-based bicyclist detection in naturalistic driving video is a very challenging problem due to the high variance of bicyclist appearance and the complex background of naturalistic driving environments. In this work, we propose a two-stage multi-modal bicyclist detection scheme to efficiently detect bicyclists with varied poses for further behavior analysis. A new motion-based region of interest (ROI) detection is first applied to the entire video to narrow the region for sliding-window detection. Then an efficient integral-feature-based detector is applied to quickly filter out negative windows. Finally, the remaining candidate windows are encoded and tested by three pre-learned pose-specific detectors. Experimental results on our TASI 110-car naturalistic driving dataset show the effectiveness and efficiency of the proposed method, which outperforms traditional methods.
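The integral-feature filtering step can be illustrated with an integral image, which lets the sum over any rectangle be read with four lookups, so many sliding windows can be scored cheaply. The sketch below assumes a grayscale image as a 2-D list and scores each window with a single two-rectangle (left half minus right half) Haar-like feature; the feature, window size, stride, threshold, and function names are hypothetical choices, not the trained detector from the paper. Windows that survive would then go on to the pose-specific detectors.

```python
from typing import List, Tuple

Image = List[List[int]]


def integral_image(img: Image) -> List[List[int]]:
    """ii[y][x] = sum of img over rows < y and columns < x (one-pixel zero border)."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def box_sum(ii: List[List[int]], x: int, y: int, w: int, h: int) -> int:
    """Sum of the w x h rectangle whose top-left corner is (x, y), in O(1)."""
    return ii[y + h][x + w] - ii[y][x + w] - ii[y + h][x] + ii[y][x]


def candidate_windows(img: Image, win: int, stride: int,
                      min_contrast: int) -> List[Tuple[int, int]]:
    """Keep (x, y) of square windows whose left and right halves differ strongly."""
    ii = integral_image(img)
    h, w = len(img), len(img[0])
    half = win // 2
    keep = []
    for y in range(0, h - win + 1, stride):
        for x in range(0, w - win + 1, stride):
            left = box_sum(ii, x, y, half, win)
            right = box_sum(ii, x + half, y, win - half, win)
            if abs(left - right) >= min_contrast:
                keep.append((x, y))
    return keep
```

The cost of building `ii` is paid once per image, after which every window costs the same regardless of its size, which is why such features suit a fast rejection stage.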

Publications:

C. Liu, R. Fujishiro, L. Christopher, J. Y. Zheng, Vehicle-bicyclist dynamic position extracted from naturalistic driving videos, IEEE Transactions on Intelligent Transportation Systems, 18(4), 734-742, 2017.

K. Yang, C. Liu, J. Y. Zheng, L. Christopher, Y. Chen, Bicyclist detection in large scale naturalistic driving video, IEEE 17th International Conference on Intelligent Transportation Systems (ITSC), 1638-1643, 2014.

Vehicle Collision Alert based on Visual Motion

This work probabilistically models various dangerous situations that may happen to a vehicle driving on the road, and determines how such events are mapped to the visual field of the camera. Based on the motion flows detected in the camera, our algorithm identifies potential dangers and computes the time to collision (TTC) for alarming. The identification of dangerous events is based on location-specific motion information modeled as likelihood probability distributions. The originality of the proposed approach lies in location-dependent motion modeling using knowledge of the road environment, which links the detected motion directly to potential danger for accident avoidance. Mapping visual motion directly to dangerous events bypasses complex shape recognition, so the system can respond without delay.
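For the time-to-collision part, a common textbook relation (not necessarily the formulation in the cited papers) estimates TTC from how fast an object's image grows. If the image width s is proportional to 1/Z and the closing speed v is constant, then TTC = s/(ds/dt). With widths s1 and s2 measured dt apart, Z2 = Z1 - v*dt gives s2 - s1 proportional to v*dt/(Z1*Z2), so dt*s1/(s2 - s1) equals Z2/v, the TTC at the second frame. The function names and the 2-second alarm threshold below are hypothetical.

```python
import math


def ttc_from_scale(s_prev: float, s_curr: float, dt: float) -> float:
    """Time to collision at the current frame from image-size expansion.

    Assumes image size is proportional to 1/Z and the closing speed is constant
    over dt; then dt * s_prev / (s_curr - s_prev) equals Z_curr / speed exactly.
    Returns math.inf when the object is not expanding (not approaching).
    """
    growth = s_curr - s_prev
    if growth <= 0:
        return math.inf
    return dt * s_prev / growth


def should_alarm(ttc: float, threshold_s: float = 2.0) -> bool:
    """Hypothetical alarm rule: warn when the predicted collision is imminent."""
    return ttc < threshold_s
```

As a check, an object closing from 20 m to 19 m in 0.1 s (10 m/s) whose image width goes from 5.0 to 100/19 pixels yields a TTC of 1.9 s, matching 19 m / 10 m/s.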

Publications:

M. Kilicarslan, J. Y. Zheng, Predict collision by TTC from motion using single camera, IEEE Transactions on Intelligent Transportation Systems, 20(2), 522-533, 2019. (video)

M. Kilicarslan, J. Y. Zheng, Direct vehicle collision detection from motion in driving video, IEEE Intelligent Vehicles Symposium, 1558-1564, 2017.

M. Kilicarslan, J. Y. Zheng, Bridge motion to collision alarming using driving video, 23rd International Conference on Pattern Recognition, 1870-1875, 2016.

M. Kilicarslan, J. Y. Zheng, Modeling Potential Dangers in Car Video for Collision Alarming, IEEE International Conference on Vehicular Electronics and Safety, 1-6, 2012.

M. Kilicarslan, J. Y. Zheng, Towards Collision Alarming based on Visual Motion, IEEE International Conference on Intelligent Transportation Systems, 1-6, 2012.

A. Jazayeri, H. Cai, J. Y. Zheng, M. Tuceryan, Vehicle Detection and Tracking in Car Video Based on Motion Model, IEEE Transactions on Intelligent Transportation Systems, 12(2), 583-595, 2011.

Road Appearances and Edge Detection in All Weathers and Illuminations (partly supported by Toyota)

Road edge detection is a fundamental function for keeping a vehicle from running off the road. Existing work on road edge detection has not exhaustively tackled all weather and illumination conditions. We first categorize the visual appearance of roads based on their physical and optical properties under various illuminations. Then, a data mining approach is applied to a large driving video set covering the full spectrum of seasons and weather to learn the statistical distribution of road edge appearances. The obtained color parameters of the road environment and road structure are used to roughly classify the weather in the video, and the corresponding algorithm and features are then applied for robust road edge detection. To visualize the road appearance and evaluate the accuracy of the detected road, a compact road profile image is generated that reduces the data to a small fraction of the video. Through exhaustive examination of all weather and illumination conditions, our road detection methods can locate road edges in good weather, reduce errors in dark illuminations, and report road invisibility in poor illuminations.
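Within a road profile, each row is one scan line across the road, so road edges appear as strong intensity transitions on either side of the vehicle's path. The sketch below assumes a grayscale scan line with the vehicle path near its center; it returns the strongest transition in each half, or None when either is too weak, which corresponds to reporting the road edge as invisible. The `min_contrast` threshold and `detect_edges` name are hypothetical, and a plain gradient is a simplification: the published methods select features per weather and illumination class learned from data mining.

```python
from typing import List, Optional, Sequence, Tuple


def detect_edges(line: Sequence[float],
                 min_contrast: float) -> Optional[Tuple[int, int]]:
    """Return (left, right) road-edge positions on one profile line, or None.

    Position i denotes the transition between pixel i and pixel i + 1. The left
    edge is the strongest transition left of center, the right edge the
    strongest transition right of center.
    """
    grad: List[float] = [abs(b - a) for a, b in zip(line, line[1:])]
    mid = len(line) // 2
    left_part, right_part = grad[:mid], grad[mid:]
    if not left_part or not right_part:
        return None
    left = max(range(len(left_part)), key=left_part.__getitem__)
    right = mid + max(range(len(right_part)), key=right_part.__getitem__)
    if min(grad[left], grad[right]) < min_contrast:
        return None  # edges too faint: report the road edge as not visible
    return left, right
```

Applying this to every row of a road profile gives a pair of edge traces over time, with gaps wherever the road edge could not be seen.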

Publications:

G. Cheng, J. Y. Zheng, M. Kilicarslan, Semantic Segmentation of road profiles for efficient sensing in autonomous driving, IEEE Intelligent Vehicles Symposium, 1-6, 2019.

Z. Wang, G. Cheng, J. Y. Zheng, Road edge detection in all weather and illumination via driving video mining, IEEE Transactions on Intelligent Vehicles, 4(2), 232-243, March 2019.

G. Cheng, Z. Wang, J. Y. Zheng, Modeling weather and illuminations in driving views based on big-video mining, IEEE Transactions on Intelligent Vehicles, 3(4), 522-533, 2018.

Z. Wang, G. Cheng, J. Y. Zheng, All weather road edge identification based on driving video mining, IEEE Intelligent Transportation Systems Conference, 2017.

G. Cheng, Z. Wang, J. Y. Zheng, Big-video mining of road appearances in full spectrums of weather and illuminations, IEEE Intelligent Transportation Systems Conference, 2017.

Night Road and Illuminations (supported by Toyota)

Pedestrian Automatic Emergency Braking (PAEB), which helps avoid or mitigate pedestrian crashes, has been installed on some passenger vehicles. Since approximately 70% of pedestrian crashes occur in dark conditions, one important component of PAEB evaluation is the development of standard testing at night. The test facility should include a representative low-illuminance environment to enable examination of the sensing and control functions of different PAEB systems. The goal of this research is to characterize and model light source distributions and variations in the low-illuminance environment, and to determine possible ways to reconstruct such an environment for PAEB evaluation. This paper describes a general method to collect light source and illuminance information by processing a large number of potential collision locations at night from naturalistic driving video data. The study was conducted in four steps:

(1) Gather night driving video from the Transportation Active Safety Institute (TASI) 110-car naturalistic driving study, with particular emphasis on locations with potential pedestrian collisions.

(2) Generate a temporal video profile as a compact index into the large volume of video.

(3) Identify light fixtures by removing dynamic vehicle headlights from the profile, and stamp them with their Global Positioning System (GPS) coordinates.

(4) Find the average distribution and intensity of illuminants by grouping lighting component information around the potential collision locations.

The resulting lighting model and settings can be used to reconstruct the lighting at a PAEB testing site.
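Step (4) amounts to spatial grouping around each potential collision location. A minimal sketch, assuming each identified light fixture has already been stamped with a latitude, longitude, and a measured intensity, uses the haversine great-circle distance and averages the intensities of fixtures within a radius. The `Light` record, the 50 m default radius, and the function names are hypothetical.

```python
import math
from typing import List, NamedTuple, Optional

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in metres


class Light(NamedTuple):
    lat: float        # degrees
    lon: float        # degrees
    intensity: float  # hypothetical brightness measure taken from the profile


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in metres between two latitude/longitude points."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp = p2 - p1
    dl = math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def mean_intensity_near(lights: List[Light], lat: float, lon: float,
                        radius_m: float = 50.0) -> Optional[float]:
    """Average intensity of fixtures within radius_m of a collision location."""
    near = [light.intensity for light in lights
            if haversine_m(lat, lon, light.lat, light.lon) <= radius_m]
    return sum(near) / len(near) if near else None
```

Running this for every potential collision location yields a per-site lighting summary; in practice, the radius would be chosen to match the area a test track is meant to reproduce.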

Publications:

L. Dong, S. Chien, J. Y. Zheng, Y. Chen, R. Sherony, H. Takahashi, Modeling of Low Illuminance Road Lighting Condition Using Road Temporal Profile, SAE Technical Paper, 1638-1643, 2016.

Railway Online

Patrol-type surveillance is performed everywhere, from police city patrols to railway inspection. Unlike static cameras or sensors distributed in a space, such surveillance has the benefits of low cost, long coverage distance, and efficiency in detecting infrequent changes. However, the challenges are how to archive daily recorded videos in limited storage space and how to build a visual representation for quick and convenient access to the archived videos. We tackle these problems by acquiring and visualizing route panoramas of rail scenes. We analyze the relation between train motion and video sampling, as well as constraints such as resolution, motion blur, and stationary blur, to obtain a desirable panoramic image. The generated route panorama is a continuous image with complete, non-redundant scene coverage and a compact data size, which can easily be streamed over the network for fast access, maneuvering, and automatic retrieval in railway environment monitoring. We then visualize the railway scene by rendering the route panorama for interactive navigation, inspection, and scene indexing.
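A route panorama can be sketched as a slit scan: a narrow vertical strip is cut from the same column position of every frame, and the strips are concatenated. For coverage that is complete but non-redundant, the strip width should match the image-plane displacement between frames, which for a pinhole camera looking sideways at scenery at depth D while moving at speed V is w = f*V/(D*fps) pixels. This is a simplified model assuming a sideways-looking camera, constant speed, and a single dominant scene depth; it ignores the blur constraints analyzed in the papers, and the function names are hypothetical.

```python
from typing import List, Sequence

Frame = List[List[int]]  # hypothetical: a grayscale frame as rows of pixel values


def slit_width(f_px: float, speed_mps: float, depth_m: float, fps: float) -> float:
    """Image-plane shift (pixels) per frame of scenery at depth_m, camera moving sideways."""
    return f_px * speed_mps / (depth_m * fps)


def route_panorama(frames: Sequence[Frame], col: int, width: int) -> Frame:
    """Concatenate columns [col, col + width) of every frame, left to right."""
    height = len(frames[0])
    pano: Frame = [[] for _ in range(height)]
    for frame in frames:
        for y in range(height):
            pano[y].extend(frame[y][col:col + width])
    return pano
```

For example, at 30 m/s with f = 1000 px, D = 10 m, and 30 fps, each frame should contribute a 100-pixel strip; a narrower strip leaves gaps and a wider one repeats scenery. Depending on the direction of travel, the strips may need to be appended in reverse order.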

Publications:

S. Wang, S. Luo, Y. Huang, J. Y. Zheng, P. Dai, Q. Han, Railroad online: acquiring and visualizing route panoramas of railway scenes, The Visual Computer, 30(9), 1045-1057, 2014.

S. Wang, S. Luo, Y. Huang, J. Y. Zheng, P. Dai, Q. Han, Rendering railway scenes in Cyberspace based on route panoramas, International Conference on Cyberworlds (CW), 12-19, 2013.

S. Wang, J. Y. Zheng, Y. Huang, S. Luo, Route panorama acquisition and rendering for high-speed railway monitoring, IEEE International Conference on Multimedia and Expo, 1-6, 2013.